‘I was just holding a Doritos bag’: AI mistake leads to armed police confrontation with Baltimore teen

Baltimore’s Kenwood High School faces outrage after a false AI alert led to a dangerous encounter for 16-year-old Taki Allen.

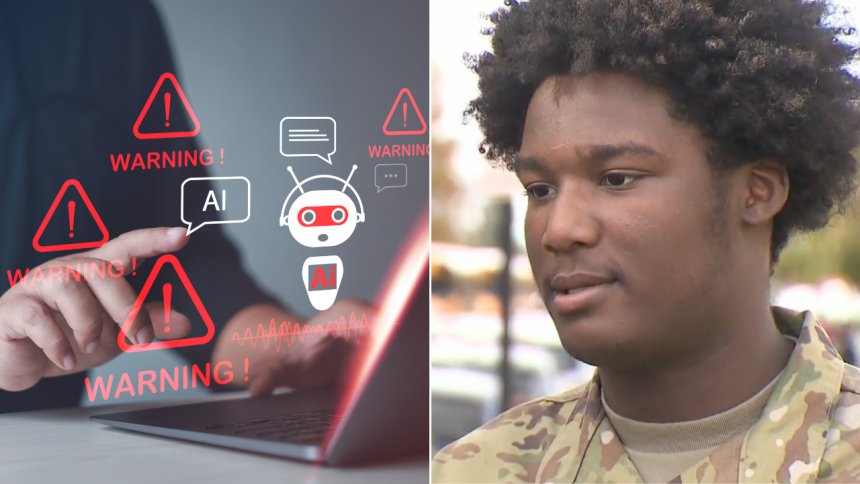

AI, or artificial intelligence, has slowly cemented its place in society through its ability to create hyper-realistic deepfake videos, songs, and pictures, gather information in record time, and assist with daily tasks. Beyond the funny AI trends on social media, however, law enforcement is also using the technology. While some may see this evolution as revolutionary, AI platforms themselves warn that they make errors, and when these systems err in law enforcement, they can damage the very communities officers are supposed to protect and serve.

This week, in Baltimore, Maryland, 16-year-old Taki Allen was approached by officers with guns drawn, handcuffed, and searched because an AI system mistook his hand holding a bag of chips for a weapon. While sitting outside Kenwood High School waiting to be picked up, Allen was eating a bag of Doritos. Twenty minutes after finishing the chips, the high school student was confused when a flock of police officers approached him and his friends with their guns drawn.

“It was like eight cop cars that came pulling up for us. At first, I didn’t know where they were going until they started walking toward me with guns, talking about, ‘Get on the ground,’ and I was like, ‘What?’” Allen told WBAL-TV 11 News.

The 16-year-old continued: “They made me get on my knees, put my hands behind my back, and cuffed me. Then, they searched me and they figured out I had nothing. Then, they went over to where I was standing and found a bag of chips on the floor. I was just holding a Doritos bag — it was two hands and one finger out, and they said it looked like a gun.”

Officers later informed Allen that the way he was holding the bag of chips triggered the school’s AI gun detection system, Omnilert, which automatically alerted law enforcement and the school’s administration.

“The program is based on human verification, and in this case the program did what it was supposed to do which was to signal an alert and for humans to take a look to find out if there was cause for concern in that moment,” Superintendent Dr. Myriam Rogers told WMAR 2 News.

However, Allen, like many parents who learned about the incident later, is not convinced and no longer feels safe in one of the few places children should feel safe.

“I don’t think no chip bag should be mistaken for a gun at all,” Allen explained. “I was expecting them [Kenwood High School administrators] to at least come up to me after the situation or the day after, but three days later, that just shows like, do you really care or are you just doing it because the superintendent called me. Now, I feel like sometimes after practice I don’t go outside anymore. Cause if I go outside, I don’t think I’m safe enough to go outside, especially eating a bag of chips or drinking something. I just stay inside until my ride comes.”

“Nobody wants this to happen to their child. No one wants this to happen,” Allen’s grandfather, Lamont Davis, added.

Though the school’s principal, Kate Smith, has reportedly not reached out to Allen, she did send a letter to parents stating that “ensuring the safety of our students and school community is one of our highest priorities.”

“We understand how upsetting this was for the individual that was searched as well as the other students who witnessed the incident. Our counselors will provide direct support to the students who were involved in this incident and are also available to speak with any student who may need support,” the letter also noted. “We work closely with Baltimore County police to ensure that we can promptly respond to any potential safety concerns, and it is essential that we all work together to maintain a safe and welcoming environment for all Kenwood High School students and staff.”

This comes at a time when AI companies like OpenAI are reviewing system regulations, and figures like Meghan Markle and Prince Harry are petitioning to ban advanced “superintelligence” systems that are expected to surpass human cognitive ability, per Forbes.

“To safely advance toward superintelligence, we must scientifically determine how to design AI systems that are fundamentally incapable of harming people, whether through misalignment or malicious use,” UC Berkeley professor Stuart Russell told the outlet. “We also need to make sure the public has a much stronger say in decisions that will shape our collective future.”
